From OR to EMR: Informed Consent’s Rocky Transition to Data

Hackable Part 4

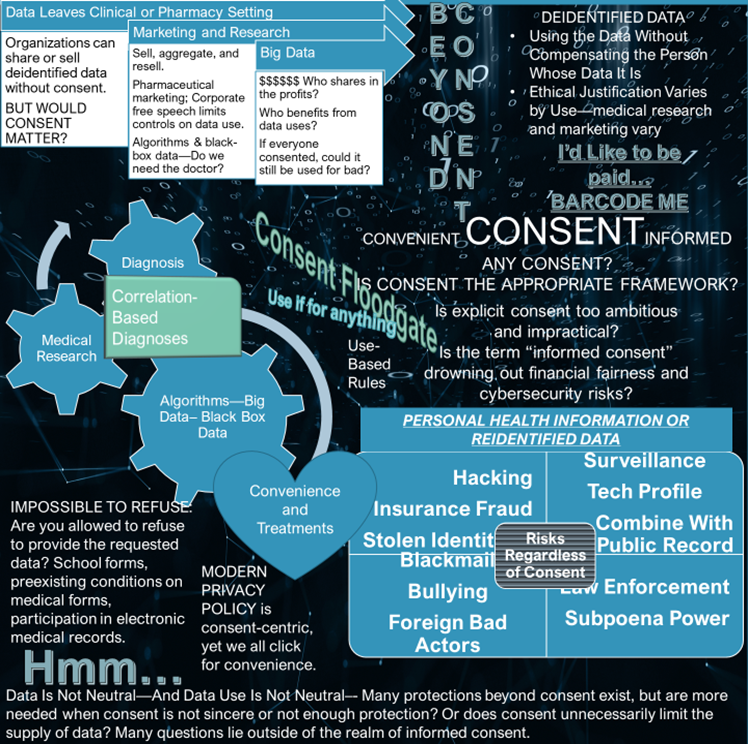

A hyper-focus on informed consent as the primary tool to ensure autonomy reflects a lapse in the field of bioethics. To me, informed consent is more valuable in traditional clinical care or medical research than in engagement with big data. Yet consent is the operational tool behind widespread data collection and the prevailing framework for big data. Consent opens the floodgates to permissible data uses and leaves people vulnerable to both nonconsensual and unexpected uses. Perhaps informed consent shifts responsibility, making people bear risk for what should be a corporate responsibility.

Informed consent seems to acknowledge people’s freedom to choose and is presented as person-centric or empowering. I question that assumption and look at the role of consent in making a system work conveniently and easily, documenting a permission that may be used as protection from liability, yet not always in the interest of society, of the person seeking care, or of the person whose data is collected. Rather than being consistently mutually beneficial, informed consent in the context of medical and wellness data may be a convenience: it connects records to insurers, allows for electronic medical records as well as search histories and purchasing preferences that indicate health conditions, and feeds large pools of aggregated data. Informed consent can protect the body from unwanted touch but is not as adept at protecting people’s data. That is, informed consent alone is insufficient to empower people in the collection and use of data, yet hospitals, radiology centers, web-based applications and ecommerce sites, and practitioners ask more of it than of other principles.

In the OR

The term “informed consent” emerged in the 1950s. The concept arose from much earlier cases where people seeking medical care were subjected to bodily intrusions to which they did not consent. See, for example, Mohr v Williams (surgeon operated on the left ear when the plaintiff had agreed to surgery on the right), Pratt v Davis (nonconsensual hysterectomy), and Schloendorff v Society of New York Hospital (tumor removed when the plaintiff had consented only to an examination under anesthesia). In Salgo v Leland Stanford, Jr. University Board of Trustees (1957), the court first used the term “informed consent”. Canterbury v Spence (1972) took a patient-centered view of informed consent, requiring disclosures of certain risks. Doctors must include small risks of severe harms, yet, to be disclosable, risks must be known. Informed consent became the assurance that doctors must respect patient autonomy, a concept arguably eroded by competing interests and limited in certain circumstances like emergency medicine. Yet it was around well before modern technology and big data. It did not come from a tradition of protecting data. It arose from a tradition in the doctor-patient relationship having to do with bodily acts. Is the same old informed consent enough to protect consumers, especially consumers of healthcare, in light of data mining?

Informed consent is a valuable first-line protection, but it is more meaningful in surgical or clinical settings, when the subject of the consent is the medical procedure or medicine, than when it is used for data collection, high-tech radiology or digitized results, or electronic medical record storage, where model consent language reveals its role in limiting liability.

Consent as a Quid Pro Quo

As noted in a post about voluntariness, to be realistic, consent should be viewed as a condition of participation rather than as fully voluntary. Consent is a quid pro quo and may bar future complaints because it is seen as a waiver. It is an entry fee to receiving medical care, to using certain websites, and to accessing the healthcare system in its current form.

Electronic Medical Records (EMR), Images, and Big Data

For example, I will give you consent if you will give me an MRI. When the consent form says my data will be shared with an insurance company and used for medical research, I sign in exchange for the MRI. A person signing may assume the medical research is secure, in-house, and limited to deidentified data, and it may be. But the research data may also be added to public research databases, access to which is often password protected. In this case, let’s say it is a brain MRI. It is possible to identify people from MRIs using facial recognition technology. Consent, whether fully or only somewhat informed depending on the person’s understanding of technology, does not prevent identifiable imagery or digitized faceprints from making their way to less secure and even global research portals, and eventually, in a deidentified format, to data miners. It also does not prevent genetic discrimination based on the results, as the mere collection of the data may create a vulnerability. Ultimately, consent does not control data very well.

In the data context, consent merely leads to the collection and storage of data in ways governed by other policies, laws, and practices. As a side note, HIPAA also was not designed to protect data as much as to create rules surrounding the portability and accessibility of data. In medical ethics, the good of the data (“beneficence”) is the ability to learn more about people, diseases, and health. Rather than viewing privacy alone, the medical research community sometimes weighs risks to privacy against the benefits that may derive from the data, with an eye to maintaining deidentified, secure pools of data. Many privacy laws written as computers developed focused on certain characteristics, distinguishing personal data from nonpersonal, anonymous, or deidentified data. Resolutions concerning data privacy often are dual purpose. For example, the Madrid Resolution of 2009, approved by the International Conference of Data Protection and Privacy Commissioners, aims to protect personal data and facilitate the flow of personal data “in a globalized world.”

Breaches Operate Outside of Informed Consent

In medicine, if a person consents to surgery understanding the risks, and one of the predictable risky events happens, the consent may serve as an appropriate waiver of the right to sue, or even to complain. Well, you knew the risks. Even when consent seems involuntary and is viewed through the lens of access to care, the realistic quid pro quo, it is a good ethical cornerstone in medical care. Informed consent’s liability protection may end where medical malpractice occurs. In cybersecurity, even if the person knows some of the risks, there is reason to question the ethics of how the person is interacting with the technology, and how vulnerable society and people are to harms that exist outside of consent.

The data world is larger than the operating room. The risks are of a totally different nature from the physical risks, or mental and emotional risks of physical harms from medical care. The onus should not be on the person consenting.

Data and Non-Bodily Harm

The harms that exist regardless of informed consent can be categorized as illegal acts, accidental or negligent data leaks, and financial injustices. Privacy categorically cuts across all three.

The Illegal

First, some harms are inappropriate and illegal actions like hacking, ransomware attacks, foreign adversaries using data for strategic advantage, extortion plots, identity theft, bullying, and theft by hacking bank accounts and financial data. Ransomware attacks could hurt those seeking care, as hospitals may be unable to see encrypted medical records, something potentially dangerous for people experiencing medical emergencies. Hacking is nonconsensual; it operates outside of consent. Yet consent at the point of collection creates the vulnerability. Cybersecurity is arguably protective, yet we observe crippling effects of ransomware attacks or data breaches fairly often. Assuming that everything is hackable would make people reconsider what information they share and how. Maybe this “hackable assumption” would rein in certain behaviors and highlight a lapse in privacy law and policy. That is, people’s permission, or explicit informed consent, is not enough to protect them.

The Mistakes

Second, unintentional data leaks or inadvertent security lapses by medical systems, hospitals, or insurers may expose people’s personal and financial data that hospitals collect. Employees may save data in a non-secure way or temporarily put data on a phone without the person’s consent. Leaks that reflect purchases or search history may also divulge personal medical issues and would not be protected by privacy laws specific to health care. Consent does not allow poor storage, yet it has little power to prevent it.

The Unfairness

Third, there is financial unfairness when industry profits from marketing and advertising using the data. With or without consent, the profit problem exists. In the medical setting, the informed consent paperwork does not mention that the person signing would be waiving the right to compensation for the use of the data, the deidentified data, or even a faceprint, which is not deidentifiable. It is simply assumed that the person seeking health care gives any piece of the data for free, that the hospital can use it for research with consent and will sell what it is permitted to sell, and that MRI or other radiology businesses, pharmacies, online stores or website owners, medical businesses, biotech firms, and device sellers can sell more broadly to data miners, as they are less constrained by regulation. In Barcode Me, I assert that I would like compensation for my data.

Key to Solutions

Privacy Enforcement Occurs After the Damage

The law covers privacy problems both by punishing after the fact and by requiring efforts to protect the data. The HIPAA “Privacy Rule”, created to meet the Fair Information Practice Principles, is enforceable in various ways. For example, if personal health information is leaked, the Office for Civil Rights (OCR) can fine wrongdoers. The Department of Justice (DOJ) also has enforcement authority, and the Federal Trade Commission (FTC) can enforce as well. Most enforcement options concern “deceptive” or “unfair” practices, yet there have been many fines for software flaws and security failures. But the enforcement solutions do not protect people in advance, and often “best efforts” are not enough. The principles behind privacy laws could fill in where informed consent stops protecting. Laws that balance portability, data sharing, and convenience do not account for people’s rights as well as principles of fairness, transparency, and use and collection limitations would.

Higher-Level Public Policy

At a higher level, regulations and best practices could alter incentives that encourage data resale and overuse at many levels. For example, pharmaceutical companies’ desire to purchase prescriber-identifying information from data miners for marketing, especially for detailing, may not be ethically justified. If marketing and advertising were regulated more stringently, the data would become less valuable to them, disincentivizing its purchase. (For now, though, free speech protects some marketing tactics.)

Also, laws surrounding liability influence consent. I would assert that consent should not preclude a cause of action concerning data. Holding organizations to cybersecurity standards could lead to improved technology, innovations in the cybersecurity space, and an increased role for collection limitation to reduce the risk of liability. People consenting to collection, use, or storage are not really taking on the risk in a meaningful way. They are merely asking to participate in the care, radiology, or purchase, or they wish to share information for medical research. Of course, they expect best efforts to keep data safe. Consenting to data collection, storage, and use does not do the same task as consenting to surgery. Much data collection that involves health and wellness, especially in the online ecosystem of purchase and search history, could be governed without the “cookies” nonsense. Rules about data collection could govern instead, allowing people to freely use websites (generating profits for businesses) and guaranteeing them that no extra data is collected, or that it would not be sold. The same holds true for quasi-medical consumer businesses like radiology outlets or pharmacies. Companies could purchase people’s data explicitly. That is, the whole thing could be turned on its head.

Thoughts

People and the population at large do not have a perfect avenue for protection from those harms to which consent is irrelevant. As a matter of the general welfare or national security, reimagining informed consent would be valuable, considering its failure to operate neatly outside of traditional medicine and scenarios that involve the body. The framework of consent for the collection and use of machine-generated images, data, purchase history, and electronic medical records is insufficient. It is clunky and allows a universal shrug: you consented, you accepted cookies, you submitted the school form, you permitted the use for research. Other laws pick up the pieces after a breach. Principles like collection and use limitations may need to be pushed to the forefront of data ethics and operate without a simple waiver. Once the door to participation is opened by “informed consent”, a body of protections should be in place that elevates the other principles, especially financial fairness. The aggregated, sold, and resold data is a vulnerability. And it is unclear that consent would make any difference.

Photo 125171698 / Doctor © Sergey Tinyakov | Dreamstime.com